Computers are everywhere today, from our laptops and tablets to our cars. But how did we get here? How did computers evolve from bulky machines that filled entire rooms to sleek devices that fit in our pockets? This blog post invites you to explore pivotal moments through the influential vintage computers that have not only shaped the history of computing but have also left an indelible mark on the world.

The Early Days: Mechanical Calculators and Punched Cards

Our exploration into the roots of computing leads us to the 19th century, when inventors and mathematicians set out to design mechanical devices capable of calculation and data storage. Calculating aids themselves are far older: the abacus, a frame with beads mounted on rods, has been used to perform arithmetic for thousands of years. Its early forms are often traced to ancient Mesopotamia more than 4,000 years ago, and the Chinese abacus (suanpan) is documented from around the 2nd century BC.

Mechanical Calculator from 1950, Photo by Loren Biser

Another pivotal development in computing history was the punched card, a piece of stiff paper or cardboard with holes punched in it to represent data or instructions. Punched cards were popularized by Joseph Marie Jacquard, a French weaver and merchant, whose loom, demonstrated in 1801, used chains of punched cards to weave fabric designs automatically. It built on punched-paper loom controls devised in the 1720s by Basile Bouchon and Jean-Baptiste Falcon. Punched cards would later be used by many early computers to store and process information.

The Analytical Engine, a visionary project by Charles Babbage in the 1830s, aimed to be a steam-powered mechanical marvel capable of performing diverse calculations using punched cards. Although it was never fully built, owing to the lack of funding and technology at the time, it inspired many future generations of computer scientists and engineers. One of the most notable admirers of Babbage’s work was Ada Lovelace, an English mathematician and the daughter of poet Lord Byron. Her notes on the Analytical Engine, published in 1843, include what is often described as the world’s first computer program. Lovelace is widely regarded as the first computer programmer and a pioneer of computer science.

The Rise of Electronic Computers: Vacuum Tubes and Transistors

The 20th century saw a rapid development of electronic technology, which enabled the creation of more powerful and efficient computers. One of the key inventions that made electronic computing possible was the vacuum tube, a glass tube that contained a filament, a plate, and a grid, and could amplify electric signals or act as a switch. Vacuum tubes were first used in radios and televisions, but they soon found their way into computers as well.

One of the first electronic computers to use vacuum tubes was the Electronic Numerical Integrator and Computer (ENIAC), a massive machine that occupied a 1,800-square-foot (167-square-meter) room and weighed 30 tons (27 metric tons). The ENIAC was built by John Mauchly, an American physicist, and J. Presper Eckert, an American engineer, at the University of Pennsylvania. It was completed in late 1945 and publicly unveiled in February 1946. The ENIAC was designed to perform complex calculations for military purposes, such as computing artillery firing tables, and it was also used for calculations in nuclear weapons research.

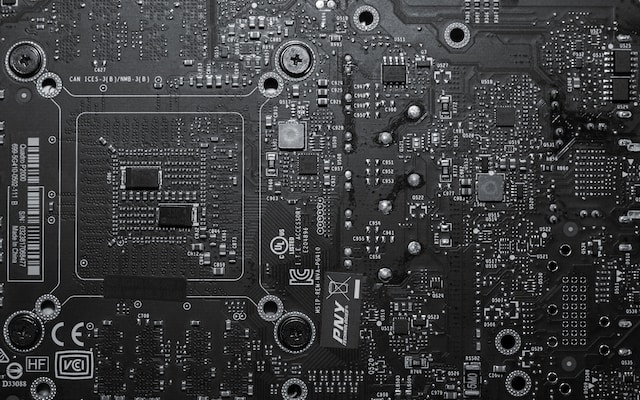

Photo by Albert Stoynov

The ENIAC was soon surpassed by other electronic computers that used more advanced and reliable technologies, such as the transistor, a semiconductor device that could amplify or switch electric signals, and magnetic core memory, a type of random-access memory that used tiny rings of magnetized material to store bits of data. The transistor was invented by John Bardeen, Walter Brattain, and William Shockley, three American physicists, at Bell Labs in 1947, and won them the Nobel Prize in Physics in 1956. The transistor was much smaller and more durable than the vacuum tube, and it eventually became cheaper and faster as well, allowing the development of smaller and more powerful computers. The first commercial machines did not yet benefit from it: the UNIVAC I (1951), the first commercially produced computer in the United States, and the IBM 701 (1952), IBM's first commercial scientific computer, still relied on vacuum tubes. Fully transistorized computers followed in the late 1950s.

Magnetic core memory was developed by Jay Forrester, an American engineer, at MIT around 1951. It improved the speed and capacity of computer memory and reduced power consumption and heat generation. Magnetic core memory was used by many computers in the 1950s and 1960s, such as the IBM 704, the first mass-produced computer with floating-point arithmetic hardware, and SAGE, a network of computers that provided air defense for the United States and Canada.

The Era of Personal Computers: Microprocessors and Integrated Circuits

The 1970s and 1980s marked the beginning of the era of personal computers, which were smaller, cheaper, and more accessible than previous generations of computers. The groundwork was laid by the integrated circuit, a set of electronic components miniaturized and fabricated on a single piece of semiconductor material. The integrated circuit was invented independently by Jack Kilby, an American engineer, at Texas Instruments in 1958, and by Robert Noyce, an American physicist, at Fairchild Semiconductor in 1959. Kilby received a share of the Nobel Prize in Physics in 2000 for the invention; Noyce had died in 1990.

The key innovation that enabled the emergence of personal computers was the microprocessor, an integrated circuit that contained the entire central processing unit (CPU) of a computer on a single chip. The first commercial microprocessor, the Intel 4004, was developed at Intel by Ted Hoff, Federico Faggin, and Stan Mazor, working with Masatoshi Shima of Busicom, and was released in 1971. This 4-bit chip powered the Busicom 141-PF, a Japanese calculator. More complex and powerful microprocessors soon followed, such as the Intel 8080, an 8-bit microprocessor that powered the Altair 8800, one of the first personal computers, and the Motorola 68000, a 16/32-bit microprocessor that powered the original Apple Macintosh, one of the most popular personal computers.

The personal computer revolution was also driven by the emergence of new software and hardware platforms that made computing more user-friendly and versatile. One of the most influential software platforms was the operating system, a program that managed the basic functions of a computer, such as memory, input/output, and file management. One of the first operating systems for personal computers was CP/M, created by Gary Kildall, an American computer scientist and the founder of Digital Research, in 1974. CP/M ran on many different machines built around the Intel 8080 and compatible processors, and supported many applications, such as word processors, spreadsheets, and databases. Another important operating system was MS-DOS, developed by Microsoft, a company founded in 1975 by Bill Gates and Paul Allen, two American entrepreneurs. MS-DOS was based on 86-DOS (originally called QDOS), a CP/M-like system written by Tim Paterson at Seattle Computer Products, and was licensed by IBM for its IBM PC, launched in 1981, which set the dominant standard for personal computers. MS-DOS became the dominant operating system for personal computers in the 1980s, and paved the way for the development of Windows, a graphical environment that initially ran on top of MS-DOS and made computing more intuitive and appealing.

Another key software platform that shaped the history of computing was the programming language, a set of symbols and rules that allowed humans to communicate with computers and create software. One of the most widely used programming languages on early personal computers was BASIC, originally developed for a time-sharing system by John Kemeny and Thomas Kurtz, two American computer scientists, at Dartmouth College in 1964. BASIC was designed to be easy to learn and use and was widely adopted by hobbyists and educators. Another influential programming language was C, developed by Dennis Ritchie, an American computer scientist, at Bell Labs in 1972. C was a powerful and portable language that could be compiled for many different platforms and operating systems, and it was used to create much important software, such as the UNIX operating system, the Linux kernel, and the reference implementation of the Python programming language.

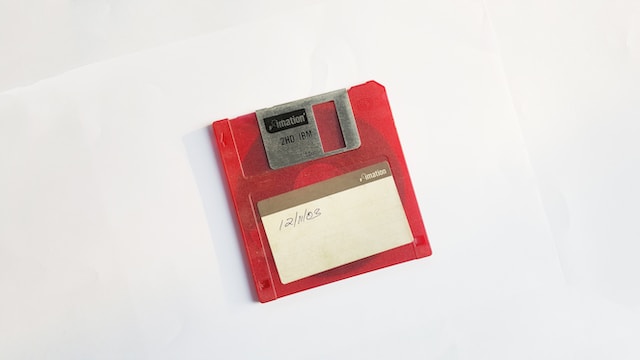

Photo by Fredy Jacob

The personal computer revolution was also fueled by the development of new hardware devices that expanded the capabilities and functionality of computers. One of the most important hardware devices was the floppy disk, a thin, flexible disk that could store and transfer data. The floppy disk was developed by a team led by Alan Shugart, an American engineer, at IBM and was introduced in 1971 as an 8-inch disk. A read/write version shipped with the IBM 3740, a data entry system, in 1973. The floppy disk was later improved by Sony, a Japanese company, which introduced the 3.5-inch floppy disk, a smaller and more durable format, in 1981. Later high-density versions of this format could store up to 1.44 megabytes of data. The floppy disk was widely used by personal computers in the 1980s and 1990s and was gradually displaced by the CD-ROM, a compact disc that could store and read data using a laser, in the late 1990s and early 2000s.

Apart from the floppy disk, another essential hardware device was the mouse, a pointing device that allowed users to interact with graphical user interfaces on the screen. The mouse was invented by Douglas Engelbart, an American computer scientist, at Stanford Research Institute in 1964, and was first used in the oN-Line System (NLS), a pioneering computer system that featured hypertext, video conferencing, and collaborative editing. The mouse was later popularized by Apple, a company founded in 1976 by Steve Jobs and Steve Wozniak, two American entrepreneurs. Apple introduced the Lisa, one of the first personal computers with a graphical user interface, in 1983, and the Macintosh, the first mass-market personal computer with a graphical user interface, in 1984.

The personal computer revolution also benefited from the emergence of new media and communication platforms that connected computers and users around the world. One of the most influential media platforms was the video game, a type of interactive entertainment that used graphics, sound, and input devices to create immersive and engaging experiences. One of the first computer video games was Spacewar!, developed by Steve Russell, an American computer scientist, and his colleagues at MIT in 1962 on a DEC PDP-1 minicomputer. Spacewar! was a two-player game in which players piloted spaceships and shot at each other. It was widely played and copied by computer enthusiasts and programmers and helped pave the way for later video games, such as Pong, the first commercially successful arcade game, released in 1972 by Atari, a company founded that year by Nolan Bushnell and Ted Dabney, two American entrepreneurs. Video games became a popular and profitable industry in the 1970s and 1980s, with the emergence of many genres, such as adventure, role-playing, strategy, simulation, shooter, and platformer, and many platforms, such as consoles, handhelds, arcades, and personal computers.

Another key communication platform that shaped the history of computing was the Internet, a global network of interconnected computers that used a standard protocol to exchange data and information. The Internet was born out of the ARPANET, a network of computers funded by the U.S. Department of Defense and developed by various universities and research institutions in the 1960s and 1970s.

The ARPANET initially ran on an earlier protocol, the Network Control Program (NCP), and later adopted TCP/IP, a suite of protocols that defined how data packets were transmitted and routed across different networks. TCP/IP was first described by Vinton Cerf and Robert Kahn, two American computer scientists, in 1974, and became the standard on the ARPANET in 1983, laying the foundation for the Internet. The Internet grew rapidly in the 1980s and 1990s, with the development and spread of many services and applications, such as email, Usenet, FTP, Telnet, Gopher, IRC, and the WWW. The WWW, or the World Wide Web, was a system that allowed users to access and share information using hypertext documents and links. The WWW was invented by Tim Berners-Lee, a British computer scientist, at CERN in 1989, and was made public in 1991. The WWW revolutionized the Internet and made it more accessible and user-friendly, with the help of web browsers, such as Mosaic, Netscape, Internet Explorer, and Firefox, and web servers, such as Apache, IIS, and Nginx.

The Future of Computers: Quantum Computing and Beyond

The 21st century has witnessed the practical emergence of a new paradigm in the history of computing, namely quantum computing, which uses the principles of quantum mechanics, such as superposition, entanglement, and interference, to manipulate and process information. Quantum computing has the potential to speed up certain problems that are intractable for classical computers, in areas such as cryptography, optimization, simulation, and machine learning.

Quantum computing is still in its infancy and faces many challenges and limitations, such as the scalability, reliability, coherence, and error correction of qubits and quantum systems. However, it has also made significant progress in the 21st century, with the development of prototype quantum computers, quantum algorithms, quantum cryptography, and early work toward a quantum internet.

The future of computing is not only about quantum computing, but also about other emerging technologies and paradigms: neuromorphic computing, which mimics the structure and function of the human brain; DNA computing, which uses DNA molecules to store and process information; optical computing, which uses light to transmit and manipulate information; and biological computing, which uses living organisms to perform computations.

The future of computing is also about the social and ethical implications and challenges of computing: the digital divide, the gap between those who have access to and benefit from computing and those who do not; cybersecurity, the protection of data and information from unauthorized access and misuse; privacy, the right of individuals and groups to control their own data and information; and responsibility, the accountability and morality of computing and its users.

Wrap-Up

The history of computing is a fascinating and inspiring story of human ingenuity, curiosity, and collaboration. From the abacus to the quantum computer, from the ENIAC to the quantum internet, from the punched card to the qubit, computing has transformed our lives and our world in countless ways. Computing is not only a science and a technology but also an art and a culture that reflects our values, aspirations, and challenges. As we enter a new era of computing, we should celebrate the achievements and innovations of the past and look forward to the opportunities and possibilities of the future.